Deploy SharePoint solutions without downtime... Really!

Hello my fellows, today I think it's time to get very serious about one MS product. Some will like it, but most developers will hate it... SharePoint On-Premises.

Every developer has their own preferred way to deploy a solution: maybe completely manually with some click adventures, or fully automated with a DevOps pipeline. But everyone knows that when you deploy a solution, the IIS server gets restarted, and there is no way around this. So it seems that a zero-downtime deployment is impossible. But that's wrong. Let me explain how you can achieve it.

Requirements

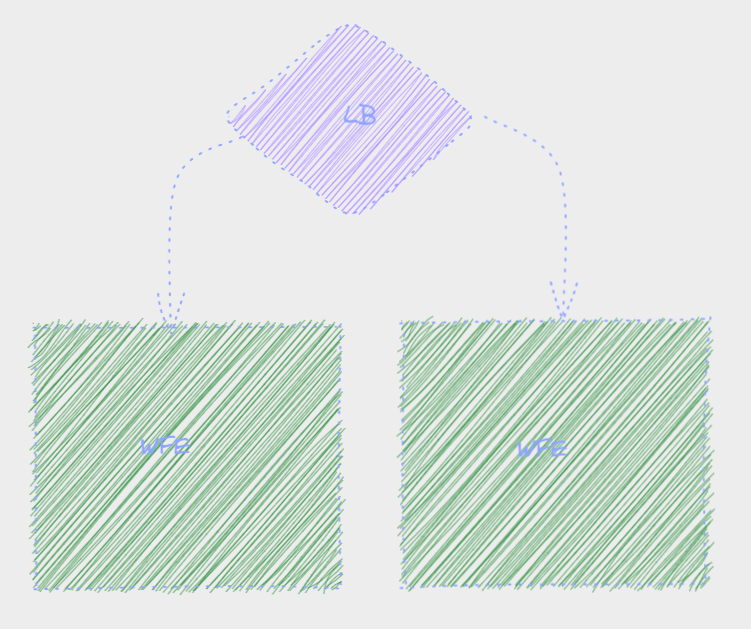

To make it clear: you cannot do a zero-downtime deployment in a single-server environment. You must have at least two web front-end (WFE) servers. Usually, a load balancer is configured to distribute the requests round robin, so that the load is divided across all WFE servers.

For example, let's assume you have the following simple server structure: one load balancer (LB) in front of two WFE servers.

When a user accesses the site, the LB checks whether the WFEs are available. If they are, it sends the user's request to the first WFE, the next request to the second WFE, the next to the first WFE again, and so on. That is the round robin procedure. The important part here is that the LB checks every time whether the WFE is available.
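The round robin procedure with availability checks can be modelled in a few lines of Python. This is only a sketch of the idea, not how any particular load balancer is implemented; the class name `RoundRobinBalancer`, the server names `WFE1` and `WFE2`, and the `is_available` callback are made up for illustration.

```python
from typing import Callable, List


class RoundRobinBalancer:
    """Toy round robin load balancer that skips unavailable servers."""

    def __init__(self, servers: List[str], is_available: Callable[[str], bool]):
        self.servers = servers
        self.is_available = is_available  # hypothetical availability check
        self._next = 0  # index of the server that is next in line

    def pick(self) -> str:
        """Return the next available server, checking availability every time."""
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if self.is_available(server):
                return server
        raise RuntimeError("no WFE available")


if __name__ == "__main__":
    down = set()  # names of servers that are currently offline
    lb = RoundRobinBalancer(["WFE1", "WFE2"], lambda s: s not in down)
    print([lb.pick() for _ in range(4)])  # alternates between both WFEs
    down.add("WFE1")
    print([lb.pick() for _ in range(3)])  # only WFE2 answers now
```

As long as at least one WFE passes the check, every request still finds a server. That is the property the rest of this post relies on.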

So our requirements are:

- At least two WFEs

- An LB with availability checks

The basic deployment

Basically, a SharePoint solution is deployed by running the install or update command once for the whole farm.

Now the process starts: it adds assemblies to the GAC, copies files to the layouts folder, and does many more things. After that, the IIS application pool is recycled to load the fresh assemblies. All of this happens on all servers in the farm simultaneously.

The consequence is that every user gets kicked off the server and may get frustrated because the work they just did could not be saved in SharePoint.

Modified command

Now let's modify the command a little bit by adding the -Local parameter.

When you execute this command, you will notice that the server where you executed it goes down, but the other server stays up. Why?

With the parameter -Local we tell SharePoint to install the new solution on the current server only. Now you can control the behaviour of the deployment process, and this is where the availability checks become important.

So in our example with two WFEs, you execute the update command with the -Local parameter on the first server. The server goes down, the LB notices this and redirects all traffic to the second WFE. After the installation, the first server is up again and you can install the package on the second WFE. The LB notices that the second server is no longer available and redirects the users to the first server. So users will not notice any downtime, because they always get redirected to a running server.
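The sequence above can be sketched as a small simulation. It does not talk to SharePoint at all: `deploy_local` is a hypothetical callback that stands in for running the update command with -Local on one server, and the function only records which servers the LB could still use during each step.

```python
from typing import Callable, List


def rolling_deploy(
    servers: List[str], deploy_local: Callable[[str], None]
) -> List[List[str]]:
    """Update one server at a time and record which servers stay online.

    `deploy_local` stands in for running the update command with -Local
    on the given server (hypothetical callback, not a SharePoint API).
    """
    online_during_step = []
    for server in servers:
        # While `server` is being updated, the LB only sees the others.
        still_online = [s for s in servers if s != server]
        if not still_online:
            raise RuntimeError("zero downtime needs at least two WFEs")
        online_during_step.append(still_online)
        deploy_local(server)  # assumed to block until the server is up again
    return online_during_step


if __name__ == "__main__":
    updated = []
    steps = rolling_deploy(["WFE1", "WFE2"], updated.append)
    print(updated)  # order in which the servers were updated
    print(steps)    # servers that kept serving users during each step
```

With a single WFE the function refuses to run, which mirrors the first requirement: there has to be another server that can take the traffic.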

To warm up SharePoint and get faster responses, execute a GET request against the server to imitate a user request to the WFE. After this, the server is ready to respond quickly.
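A warm-up request could look roughly like the following sketch. It uses only Python's standard library and assumes the page can be fetched without authentication; a farm that uses Windows authentication would need credentials on top of this. The function names `http_status` and `warm_up` are made up for this example.

```python
import urllib.request
from typing import Callable, Dict, List


def http_status(url: str) -> int:
    """Send a plain GET request and return the HTTP status code."""
    # A generous timeout, because the first request after a recycle is slow.
    with urllib.request.urlopen(url, timeout=120) as response:
        return response.status


def warm_up(
    urls: List[str], fetch: Callable[[str], int] = http_status
) -> Dict[str, int]:
    """Request every URL once so the first real user gets a fast response."""
    return {url: fetch(url) for url in urls}
```

You would call it right after the installation on a server has finished, for example `warm_up(["http://wfe1.example.local/"])`, where the address is a placeholder for the freshly updated WFE. Note that `urlopen` raises an exception for error responses such as 401 or 500, so a failed warm-up does not go unnoticed.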

Conclusion

Now you are able to deploy solutions to your farm without any downtime, because you have a well-configured load balancer that switches automatically between the instances. Your deployment concentrates on a single server; after the installation is complete, the next server is updated, and the load balancer switches between the available servers. In a farm with many WFE servers, you can of course consider deploying the solution in groups. Try it out: you will see that it is very handy and supports the DevOps lifecycle very well.