Success Story: How AI Can Help Save Lives

This blog post is a different kind of post from my usual ones. Not every reader will be familiar with the problem it addresses, since it is not a common one, so I will start by laying the groundwork.

It is a sensitive topic, and I think it is worth knowing where AI can really help. AI does not only summarize numbers or increase output; it can also help with very different tasks.

Here, it is about helping people and saving lives. Yep, you read that right: saving lives.

The Problem

Let's assume you are abroad, in a country whose language you do not speak, and your English is not very good either. Now you have had an accident. Who do you call? Right, the emergency number.

The operator then tries to get the following questions answered:

- Where are you?

- What has happened?

- Who is involved?

Sometimes there are more, but these questions must be answered quickly, especially when the accident is critical. Time matters.
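To make these three questions a bit more tangible, here is a minimal Python sketch of how they could be tracked in software. It is not taken from our system: the class `IncidentReport` and its fields are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IncidentReport:
    """Hypothetical container for the answers an operator needs first."""
    location: Optional[str] = None       # "Where are you?"
    what_happened: Optional[str] = None  # "What has happened?"
    involved: list[str] = field(default_factory=list)  # "Who is involved?"

    def open_questions(self) -> list[str]:
        """Return the core questions that are still unanswered, in asking order."""
        questions = []
        if not self.location:
            questions.append("Where are you?")
        if not self.what_happened:
            questions.append("What has happened?")
        if not self.involved:
            questions.append("Who is involved?")
        return questions


report = IncidentReport(what_happened="car accident")
print(report.open_questions())  # ['Where are you?', 'Who is involved?']
```

Whatever the AI fills in later, the operator only has to look at what is still open.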

Now let's assume the operator cannot understand you. They might try to bring a colleague onto the call who understands you better, or there might be a lot of background noise, or any number of other complications.

In such situations, it is hard for the operator to understand the caller and get answers to the questions above.

Again! Time matters!

The Idea

Today, with AI being very popular and, in some cases, very helpful, it is time to use AI as a companion.

The idea is that an AI also listens to the phone call and identifies the key points on demand, so the operator can review and confirm the data.

This is a real game-changer, especially when communication is difficult, because the operator can then act and dispatch the fire department, an ambulance, or both.

This leads to the following requirements:

- The AI must be fast enough to transcribe the voice call in near real time

- It then writes a continuously updated summary to a tablet next to the operator

- All of this must happen in real time

- The collected data must be handled securely, and data leakage must be prevented

The Setup

First, this is not a greenfield project: we cannot tear down the existing environment, so the system has to be added to what is already there. In my opinion, there is only one way to do that: connect it to the VoIP system as a listener. This has no effect on the call itself, because there is simply one more muted participant on the line.

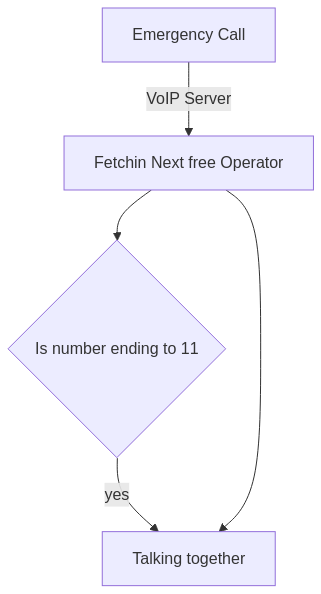

Whenever the system is active, it is added to the call. For this, we created a new call plan.

The critical point is that even if the AI is down or otherwise inoperable, it does not interfere with the operator's line. In this respect, the setup is bulletproof.
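In our setup, this isolation comes from the telephony side: the AI is just one more muted participant in the call plan. To illustrate the same principle in application code, here is a minimal Python sketch, assuming a hypothetical `AudioTap` that copies audio frames to the AI listener through a bounded buffer. The operator's path never waits for the AI; if the AI falls behind or is down, frames are simply dropped.

```python
import queue
from typing import Optional


class AudioTap:
    """Hypothetical side channel that copies audio frames to the AI listener.

    The operator's audio path never waits on it: if the AI side is slow or
    down and the buffer is full, frames are dropped instead of blocking.
    """

    def __init__(self, max_frames: int = 50):
        self._buffer = queue.Queue(maxsize=max_frames)
        self.dropped = 0

    def offer(self, frame: bytes) -> None:
        """Best-effort copy; returns immediately in every case."""
        try:
            self._buffer.put_nowait(frame)
        except queue.Full:
            self.dropped += 1  # AI is behind or unavailable; the call goes on

    def take(self, timeout: float = 0.1) -> Optional[bytes]:
        """Called by the AI listener; returns None if nothing arrives in time."""
        try:
            return self._buffer.get(timeout=timeout)
        except queue.Empty:
            return None


def forward_frame(frame: bytes, operator_line: list, tap: AudioTap) -> None:
    operator_line.append(frame)  # primary path: the operator always gets the audio
    tap.offer(frame)             # secondary path: best effort only


# Simulate an AI listener that is down: nobody calls tap.take().
tap = AudioTap(max_frames=2)
operator_line: list = []
for frame in (b"f1", b"f2", b"f3"):
    forward_frame(frame, operator_line, tap)
print(len(operator_line), tap.dropped)  # 3 1
```

The design choice is the same as in the call plan: the AI is always the optional party, never a dependency of the operator's line.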

With the setup and the call routing in place, it is time to talk about the AI.

Which AI?

We compared many AI offerings, and there are plenty of them. We considered a locally hosted AI, but we lacked the resources (money and space) to run a local server that could also scale out. We ended up with Azure AI. Yep, there are many other providers, but I am very familiar with Azure, so that is what we chose.

The Infrastructure

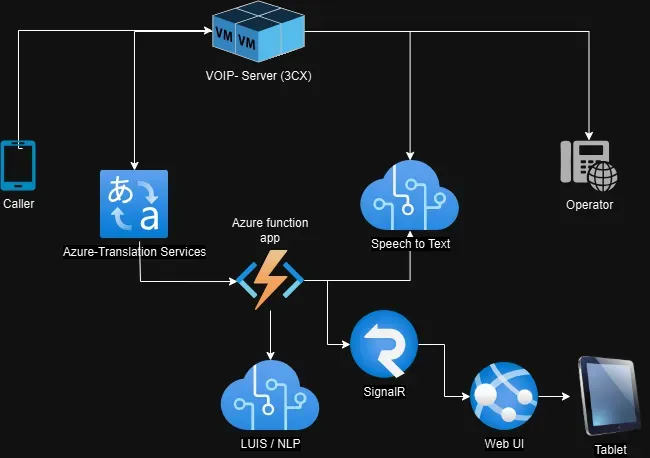

Let me list the components we used:

The VoIP System (3CX)

It handles incoming calls, call routing, and more. It also supports SIP and WebRTC.

I use SIP because it is a well-established standard for voice calls (the audio itself is carried over RTP), and the AI service does not need HD audio.

Azure Speech to Text

Azure Speech to Text is a service that can transcribe spoken words in real time. It supports many languages and provides a solid base for turning the spoken word into text, which is required for all further processing. More on that later.

Azure Conversational Language Understanding

What's that? In short, it is a service that extracts key facts from a dialog.

We use it to extract the information that answers the questions listed above. It delivers the extracted information as machine-friendly JSON, so other services can work with it.
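To show what working with that JSON can look like, here is a minimal Python sketch. The sample is shaped roughly like a Conversational Language Understanding conversation prediction (a `result.prediction.entities` list with `category`, `text`, and `confidenceScore`), but it is trimmed for illustration. The entity categories `Location`, `Incident`, and `Person` are hypothetical names you would define in your own CLU project, and the 0.6 threshold is an arbitrary assumption.

```python
import json

# Trimmed, illustrative response shaped like a CLU conversation prediction.
# The entity categories (Location, Incident, Person) are hypothetical.
SAMPLE_RESPONSE = """
{
  "kind": "ConversationResult",
  "result": {
    "query": "there was a crash on Main Street, the driver is hurt",
    "prediction": {
      "topIntent": "ReportEmergency",
      "entities": [
        {"category": "Location", "text": "Main Street", "confidenceScore": 0.93},
        {"category": "Incident", "text": "crash", "confidenceScore": 0.88},
        {"category": "Person", "text": "the driver", "confidenceScore": 0.41}
      ]
    }
  }
}
"""


def extract_key_facts(raw: str, min_confidence: float = 0.6) -> dict[str, list[str]]:
    """Group recognized entities by category, skipping low-confidence hits."""
    prediction = json.loads(raw)["result"]["prediction"]
    facts: dict[str, list[str]] = {}
    for entity in prediction.get("entities", []):
        if entity.get("confidenceScore", 0.0) < min_confidence:
            continue  # leave uncertain values for the operator to confirm by asking
        facts.setdefault(entity["category"], []).append(entity["text"])
    return facts


print(extract_key_facts(SAMPLE_RESPONSE))
# {'Location': ['Main Street'], 'Incident': ['crash']}
```

Dropping low-confidence entities instead of showing them keeps the tablet view trustworthy; the operator simply asks again.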

Azure OpenAI Service

Azure OpenAI Service sits alongside the other services and ties everything together. But we need more: an AI that advises the operator by displaying the right questions to ask on the tablet. Initially, we chose a GPT-4o model, but it was not fast enough, so we ended up using GPT-4 Turbo (with some custom instructions).
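Our actual custom instructions are not part of this post. The following Python sketch only shows the general pattern, with a hypothetical system prompt and a hypothetical `build_messages` helper. It assembles the messages in the role/content format that chat completion APIs (including Azure OpenAI) expect, and it leaves out the actual API call.

```python
# Hypothetical custom instructions, not the ones used in production.
SYSTEM_PROMPT = (
    "You assist an emergency call operator. Based on the transcript, suggest "
    "the single most important next question to ask the caller. "
    "Reply with one short, simple question."
)


def build_messages(transcript: list[str], open_questions: list[str]) -> list[dict[str, str]]:
    """Assemble chat messages in the role/content format used by chat completion APIs."""
    user_content = (
        "Transcript so far:\n" + "\n".join(transcript)
        + "\n\nStill unanswered: " + (", ".join(open_questions) or "nothing")
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_content},
    ]


messages = build_messages(["Help, there was an accident!"], ["Where are you?"])
```

To send it, the list could be passed as `messages` to `client.chat.completions.create(...)` on an `AzureOpenAI` client from the `openai` Python package, with your deployment name as `model`.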

Azure Translator

Azure Translator translates the caller's language into the operator's target language, also in real time.

Azure Web App and SignalR

To get real-time updates onto the tablet without installing an app, I use a small React web app that communicates with the server in real time via SignalR. The final infrastructure looks like this:

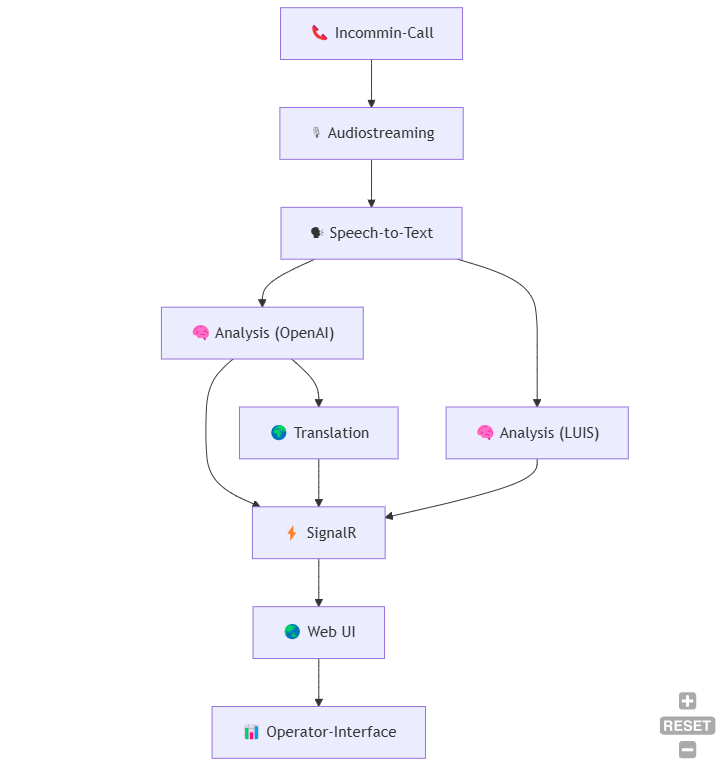

The Process

I will not show the full-blown process; instead, I will keep it simple and easy to follow.

First, there is the incoming call. Its audio stream is forwarded from our VoIP server via SIP to the Speech to Text service.

The service transcribes the caller's dialog... well, monolog (😄). From now on, we can work with text.

From here, the work is split and runs asynchronously.

The text is sent to Conversational Language Understanding (the successor of LUIS) to extract the relevant information from the spoken sentences. This information is sent via SignalR to the web interface, where the operator sees the extracted data.

In parallel, a second process sends the text to the GPT model, together with the request to suggest how to get the missing information from the caller. The model's answer is immediately pushed via SignalR to the web UI as well.
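The two parallel branches can be sketched with Python's `asyncio`. This is a minimal sketch under assumptions: `extract_facts` and `suggest_question` are hypothetical stubs standing in for the CLU and GPT calls (the sleeps only simulate their response times), and `fake_push` stands in for the SignalR broadcast to the tablet. Each branch pushes its result as soon as it is ready instead of waiting for the other.

```python
import asyncio
from typing import Awaitable, Callable

Push = Callable[[str, dict], Awaitable[None]]


async def extract_facts(text: str) -> dict:
    """Stand-in for the CLU call (hypothetical stub)."""
    await asyncio.sleep(0.05)
    return {"facts": text.split()[:3]}


async def suggest_question(text: str) -> dict:
    """Stand-in for the GPT call (hypothetical stub)."""
    await asyncio.sleep(0.1)
    return {"question": "Where exactly are you?"}


async def handle_transcript(text: str, push: Push) -> None:
    """Run both branches concurrently; each pushes its own result when ready."""
    async def facts_branch() -> None:
        await push("facts", await extract_facts(text))

    async def question_branch() -> None:
        await push("question", await suggest_question(text))

    await asyncio.gather(facts_branch(), question_branch())


async def main() -> list[str]:
    received: list[str] = []

    async def fake_push(channel: str, payload: dict) -> None:
        # In the real system, this would be a SignalR broadcast to the tablet.
        received.append(channel)

    await handle_transcript("there was a crash on Main Street", fake_push)
    return received


print(asyncio.run(main()))  # ['facts', 'question']
```

Because the faster branch is not held back by the slower one, the extracted facts can appear on the tablet before the suggested question does.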

That is the simplified process of how the system works. In reality, many more facets and inputs are involved, such as knowledge bases and GPS coordinates. But here I want to show what AI can do when used correctly.

The latency from spoken words to extracted information is typically up to 500 ms; occasionally it takes longer, up to one second.
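If you want to keep an eye on such numbers in your own setup, a small helper is enough. The following Python sketch is hypothetical and not part of our system; it times each run with `time.perf_counter` and counts how many runs exceeded a 500 ms budget. The sample values in the usage lines are made up for illustration, not measurements.

```python
import time
from contextlib import contextmanager
from typing import Iterator


class LatencyTracker:
    """Hypothetical helper that tracks 'spoken word to information' latency."""

    def __init__(self, budget_ms: float = 500.0):
        self.budget_ms = budget_ms
        self.samples_ms: list[float] = []

    def record(self, elapsed_ms: float) -> None:
        self.samples_ms.append(elapsed_ms)

    @contextmanager
    def measure(self) -> Iterator[None]:
        """Time the enclosed block and record the result in milliseconds."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.record((time.perf_counter() - start) * 1000.0)

    def over_budget(self) -> int:
        """Number of runs that took longer than the budget."""
        return sum(1 for s in self.samples_ms if s > self.budget_ms)

    def worst_ms(self) -> float:
        return max(self.samples_ms, default=0.0)


tracker = LatencyTracker(budget_ms=500.0)
with tracker.measure():
    pass  # stand-in for one run: audio chunk in, extracted facts out
for made_up_ms in (320.0, 480.0, 950.0):  # illustrative values, not real measurements
    tracker.record(made_up_ms)
print(tracker.over_budget(), tracker.worst_ms())  # 1 950.0
```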

Recap

Writing this post was a new experience for me. Normally, I try to provide a copy-paste-ready solution, but this one is very specific, so I cannot provide a ready-to-use instance.

Anyway, I decided to share this.

"Why?" you ask.

In many posts, I have seen use cases that get either fun things or purely business-relevant things out of AI, and many (if not all) of them were based on chat prompts. I am bored with chat prompts. I think AI will not help you most through a prompt; it will help you within your process, where it does not need to be visible and you do not need to interact with it directly. I think this example shows that well: the AI supports operators in their calls.

If you have more questions about this topic, please contact me. I am also interested in your opinions. What do you think about this?